TASK ORDER NO. HHSP23337007T

CONTRACT NO. HHSP23320095634WC

Submitted to:

Hui-Hsing Wong

Amber Jessup

U.S. Department of Health and Human Services

Assistant Secretary for Planning and Evaluation (ASPE)

200 Independence Avenue, SW

Washington, DC 20201

Submitted by:

Aylin Sertkaya

Anna Birkenbach

Ayesha Berlind

John Eyraud

Eastern Research Group, Inc.

110 Hartwell Avenue

Lexington, MA 02421

www.erg.com

Pharmaceutical companies conduct clinical trials for many reasons. The most obvious goal of clinical trials is to demonstrate safety and efficacy to gain Food and Drug Administration (FDA) approval. FDA provides guidance to developers about what constitutes acceptable clinical trials and appropriate outcomes. Improving the drug development process, especially by conducting better (meaning providing more information on safety or efficacy) and faster clinical trials, can foster innovation in medical product development.

The primary purposes of this study are: 1) to better understand sponsors' strategies in the design and execution of clinical trials, 2) to identify factors that may delay, hinder, or lead to unsuccessfully completed trials, and 3) to develop an operational model of clinical trial decision-making to enable examination of what-if scenarios by end-users.

This study models the decision-making process for a drug sponsor as a stylized decision tree that looks at the process for formulating a clinical trial from the point of view of an expected-revenue-maximizing sponsor in the face of uncertainty (or risk). The simplified clinical decision-making model incorporates the following considerations:

- Therapeutic area

- Potential market size/revenues for the drug

- Clinical stage

- Success probabilities by clinical stage

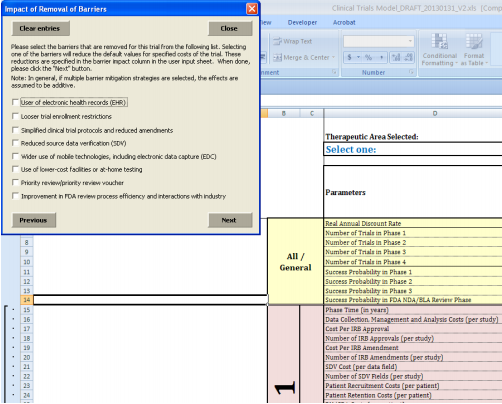

In addition to identifying the costs of the clinical trials, the following barrier mitigation strategies were analyzed:

- Use of electronic health records (EHR)

- Looser trial enrollment restrictions

- Simplified clinical trial protocols and reduced amendments

- Reduced source data verification (SDV)

- Wider use of mobile technologies, including electronic data capture (EDC)

- Use of lower-cost facilities or at-home testing

- Priority Review/Priority Review vouchers

- Improvements in FDA review process efficiency and more frequent and timely interactions with FDA

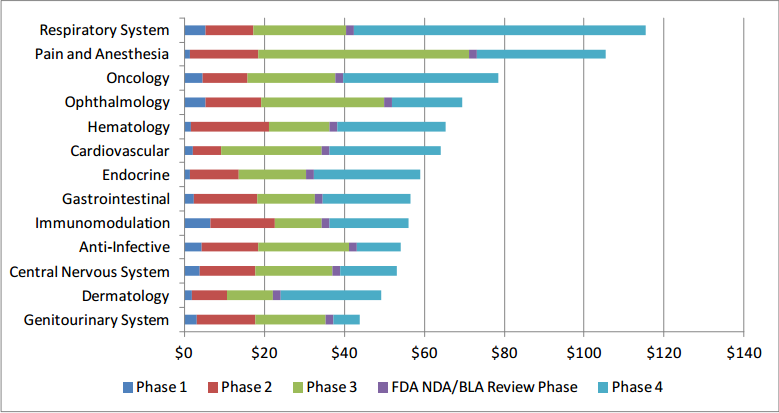

Overall, the therapeutic area with the highest clinical research burden across all phases is respiratory system ($115.3 million), followed by pain and anesthesia ($105.4 million) and oncology ($78.6 million) trials. Use of lower-cost facilities/in-home testing and wider use of mobile technologies appear to be most effective in reducing costs across therapeutic areas and trial phases. Use of lower-cost facilities and/or in-home testing can reduce per-trial costs by up to $0.8 million (16 percent) in Phase 1, $4.3 million (22 percent) in Phase 2, and $9.1 million (17 percent) in Phase 3, depending on therapeutic area.

Acknowledgments

We gratefully acknowledge Hui-Hsing Wong (ASPE) and Amber Jessup (ASPE) for their leadership, guidance, and input throughout this study. We also would like to thank Patrick Archdeacon (FDA) and Trini Beleche (FDA) for their insightful comments and advice. Medidata Solutions provided the data for the analysis of clinical trial costs. We would like to thank Lori Shields, Frank Cattie, Rafael Campo, and Joshua Hartman at Medidata Solutions for accommodating our data requests and answering our questions.

We also would like to acknowledge the contributions of our subject matter experts, Michael Silverman, Sheldon Brookman, and David Bristol (independent consultants to ERG) to formulating our interview questions and the clinical trial decision-making parameters.

Many people involved in clinical research, including pharmaceutical/biopharmaceutical company representatives, CRO personnel, and industry experts, provided valuable information for the study. We are grateful to all of them for sharing their expertise and experiences with us.

DISCLAIMER

This report was prepared by ERG under contract to the Assistant Secretary for Planning and Evaluation. The findings and conclusions of this report are those of the author(s) and do not necessarily represent the views of ASPE, FDA, or HHS.


Executive Summary

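E.1 Clinical Trial Decision-making Model

The simplified clinical decision-making model developed in this study incorporates the following considerations: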

- Therapeutic area,

- Potential market size/revenues for the drug, and

- Clinical stage (Phase 1, Phase 2, Phase 3, and Phase 4) costs that are dependent on a variety of factors, including but not limited to:

  - Physician and nursing (RN) costs;

  - Number of patients needed for the desired statistical precision;

  - Number of Institutional Review Boards (IRBs) involved;

  - Number of investigator sites;

  - Cost of clinical data collection, management, and analysis; and

  - Cost of clinical procedures.

- Success probabilities by clinical stage

The decision tree, adapted from Damodaran (2007), specifies the phases (1 through 4), the development revenue/cost at each phase, the success/failure probability for each phase, and the marginal returns associated with each step. Because it takes time to move through the phases of development, the expected-returns computation incorporates the time value of money. In the model, we compute the expected net present value at the decision point by working backwards through the tree.
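To make the backward computation concrete, the following is a minimal Python sketch of a chain-shaped decision tree; it is not the model used in this study. It assumes that each phase's cost is paid at the start of the phase, that a failure ends development with no further cash flows, that the sponsor abandons any phase whose expected value is negative, and that `payoff` is the value of net revenues at the moment the last phase succeeds. The `Phase` class, the `expected_npv` function, and all input values are hypothetical.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass
class Phase:
    """One node in a chain-shaped decision tree (hypothetical structure)."""
    name: str
    cost: float       # cost paid at the start of the phase ($ millions)
    years: float      # time until the next decision point
    p_success: float  # probability the phase succeeds


def expected_npv(phases: Sequence[Phase], payoff: float, rate: float) -> float:
    """Expected NPV at the first decision point, by backward induction.

    Starts from the payoff at the end of the tree and works backwards:
    at each node, the value is the discounted, probability-weighted value
    of the next node minus the cost of the current phase. Assumes the
    sponsor stops (value 0) rather than fund a phase with negative value.
    """
    value = payoff
    for phase in reversed(phases):
        discount = (1.0 + rate) ** -phase.years
        continuation = phase.p_success * discount * value - phase.cost
        value = max(continuation, 0.0)  # option to abandon development
    return value


if __name__ == "__main__":
    # Illustrative inputs only: phase lengths echo the DiMasi et al. means
    # cited in Section 1; costs, probabilities, payoff, and rate are hypothetical.
    pipeline = [
        Phase("Phase 1", cost=5.0, years=1.8, p_success=0.6),
        Phase("Phase 2", cost=20.0, years=2.1, p_success=0.35),
        Phase("Phase 3", cost=50.0, years=2.5, p_success=0.6),
        Phase("NDA review", cost=2.0, years=1.0, p_success=0.9),
    ]
    print(f"eNPV: ${expected_npv(pipeline, payoff=1500.0, rate=0.11):.1f}M")
```

Flooring each node's value at zero encodes the option to abandon; removing the `max` call gives a plain expected value in which the sponsor commits to every phase up front.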

E.2 Analysis of Costs

The model uses detailed cost information made available by Medidata Solutions, a global provider of cloud-based solutions for clinical research in life sciences. The cost information is specific to the U.S. and presented by therapeutic area and clinical trial phase. Key findings with respect to costs include the following:

- Overall, the therapeutic area with the highest average per-study costs across Phases 1, 2, and 3 is pain and anesthesia ($71.3 million), followed by ophthalmology ($49.9 million) and anti-infective ($41.3 million) trials. Conversely, trials in dermatology, endocrinology, and gastroenterology have the lowest overall costs across the same three phases.

- Average per-study costs across all therapeutic areas increase as clinical development proceeds from Phase 1 to Phases 2 and 3. Average Phase 4 study costs are equivalent to Phase 3 costs but vary much more across therapeutic areas.

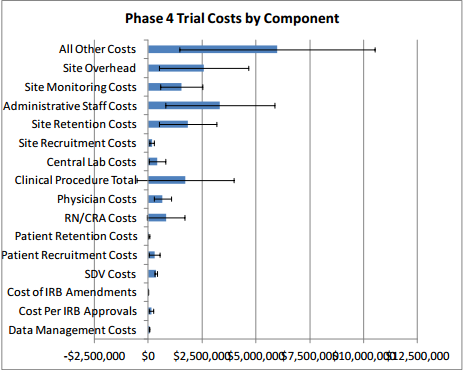

- Overall, the factors that contribute the most to costs across all trial phases include Clinical Procedure Costs (15 to 22 percent), Administrative Staff Costs (11 to 29 percent), Site Monitoring Costs (nine to 14 percent), Site Retention Costs (nine to 16 percent), and Central Laboratory Costs (four to 12 percent).

E.3 Barriers to Clinical Trials

E.3.1 High Financial Cost

- Studies estimate that it now costs somewhere between $161 million and $2 billion to bring a new drug to market.

- The aging of a larger segment of the population has resulted in a shift to chronic and degenerative disease research and an ensuing increase in development costs. Nonetheless, many companies pursue drugs for chronic diseases to have a large and steady revenue stream. Drugs for shorter-term conditions are less attractive to drug sponsors and their investors because it is less likely that the high costs of development will be recouped through revenues and earn a profit.

E.3.2 Lengthy Timelines

- According to one study, the average length of time from the start of clinical testing to marketing is 90.3 months (7.5 years).

- Longer timelines increase costs and decrease revenues.

- Longer studies are needed to see if any safety issues arise when drugs are taken long-term to manage chronic diseases.

- The “one-off” nature of trial organization protracts trial initiation timeframes.

- The clinical trial business model has not kept pace with potential for efficiency gains through technological advances or centralized coordination.

E.3.3 Difficulties in Recruiting and Retaining Participants

- Patient recruitment requires a substantial investment of time and money.

- Failure to recruit can cause costly delays or trial cancellation, wasting resources.

- There is competition for limited patient pools for certain conditions, such as rare cancers and multiple sclerosis.

- Clinical trial sites are often selected based on the location of investigators rather than patients.

- Knowledge, attitudes, and incentives of potential participants and their physicians hinder participation.

E.3.4 Increasing Competition for Qualified Investigators and Sites

- According to some, there is a shortage of biostatisticians and informaticists across academic medicine, industry, and government; others say researchers exist but are difficult to find, often due to competition. There is more widespread agreement that there is a shortage of investigators who can enroll high-quality patients. There is also competition for qualified sites, especially in popular therapeutic areas.

- The rate of attrition among U.S. investigators is increasing.

- The clinical investigator career track is unattractive to researchers.

- It is difficult for new sites to attract business, as sponsors tend to use contract research organizations (CROs) they know.

- For specialized areas such as anti-fungals, sponsors may have a very small number of qualified investigators to choose from.

E.3.5 Regulatory and Administrative Barriers

- U.S. regulations pertaining to clinical research could benefit from revisions. They were written at a time when the clinical trials enterprise was smaller and before multicenter trials became common.

- Ethical / Institutional Review Board (IRB) Approval (21 CFR 56)

  - There is often a lack of clarity regarding the roles and responsibilities of various oversight bodies and what is expected of investigators.

  - If the IRB process results in a request for changes to a trial, investigators may lack the resources to fulfill the request.

  - Regulations vary by geographic location.

- Informed Consent (21 CFR 50) – The process of obtaining informed consent from trial participants is lengthy.

- Patient Privacy: U.S. Health Insurance Portability and Accountability Act (HIPAA) (45 CFR Part 160 and Subparts A and E of Part 164) – HIPAA requires authorization from patients to use their health information for research. There are severe penalties for violating HIPAA, so IRBs enforce compliance. One result of HIPAA and other privacy laws is that site investigators are reluctant to contact patients who drop out of a trial to follow up on major outcomes. This in turn reduces statistical power.

- Regulations Governing Clinical Trial Conduct – Regulations governing the conduct of clinical trials were devised when trials were smaller and involved fewer sites.

- Regulations Governing Serious Adverse Event (SAE) Reporting for Investigational New Drugs and Biologics (INDs) (21 CFR 312) – In the past, FDA and investigators in multicenter trials have been flooded with expedited reports of serious adverse events that lack sufficient context from the aggregate data to be interpretable. A new safety reporting regulation (effective March 2011) may remedy this problem, but it is too early to tell.

- Regulations for Multiple Jurisdictions – Local, regional, national, and international regulations/guidances are numerous and not always well harmonized.

- Inadequate Clarity/Consistency/Practicality in FDA Guidance

  - Delays can be caused by differing interpretations of regulations by the various parties involved in multicenter trials.

  - Guidance is lacking for newer therapeutic areas or classes.

  - In disease areas where guidelines are nonexistent, old, or otherwise lacking, sponsors find it difficult to understand FDA expectations before beginning their studies.

- FDA is understaffed and underfunded and the available resources end up being overtaxed.

E.3.6 Drug Sponsor-Imposed Barriers

- Excessive risk-aversion leads to unnecessary steps being taken.

- In multicenter trials, uncertainty and inconsistent enrollment success across sites create a need to over-enroll and plan trials “defensively.”

- Internal review processes for organizations conducting/sponsoring clinical trials can delay a trial’s start.

- In trying to create a pure scientific experiment (to maximize likelihood of drug approval), sponsors may restrict enrollment using extensive eligibility criteria that may exclude, for example, people on other medications or with comorbidities. These constraints on enrollment make it even more difficult to find a sufficient number of participants and protract the recruiting process.

- Industry sponsors generally do not involve site investigators in the protocol design process, so the required procedures may not be easily integrated into clinical practice at the sites.

- Clinical trial protocols are increasingly complex (with more assessments, exploratory endpoints, biomarkers, biopsies, etc.), increasing the administrative burden of trials.

- More complex Case Report Forms (CRFs) including many data points can significantly increase trial monitoring costs.

- Sponsors unnecessarily collect data that may not even be relevant to the specific study.

- The lack of standardized CRFs and trial procedures across study sites can result in improperly conducted procedures or inadequate data collection at some sites.

- According to a Tufts Center for the Study of Drug Development (CSDD) study, nearly 60 percent of all trial protocols require amendments, a third of which are avoidable.

- Industry-sponsored trials are generally monitored through site visits that take place at intervals defined by standard operating procedures or study-specific monitoring plans. It is common practice to conduct site visits frequently, and source data verification (SDV) is a time-consuming part of these visits.

- Legal advisors have traditionally encouraged sponsors to be conservative in their reporting of unexpected SAEs (at least prior to March 2011, when a new drug safety reporting regulation was implemented).

E.3.7 Disconnect Between Clinical Research and Medical Care

- Community physicians are largely uninvolved in the clinical research process.

- Many healthcare professionals do not receive training in research methods.

E.3.8 Barriers at Academic Institutions

- Sponsors might be compelled to select academic centers as sites due to the presence of key opinion leaders or specific patient populations.

- Ethical and Regulatory Requirements

  - Academic institutions can take their responsibility to provide ethical and regulatory oversight to extremes and create excessive barriers to conducting clinical trials.

  - One study found that the average number of steps necessary to open a clinical trial at academic centers was over 110, in contrast to fewer than 60 steps at non-academic centers.

- Low Priority of Clinical Research in Academic Institutions

  - Many academic medical centers undervalue or fail to incentivize clinical research.

  - Fundamental principles of clinical research are not included in academic medical curricula at the graduate or undergraduate level.

  - Those studying to be physicians are not adequately trained to interpret clinical trial results, impairing their ability to use such results to inform their clinical care and practice evidence-based medicine. For example, in a survey of 367 residents, only 37.4 percent knew how to interpret an adjusted odds ratio from a multivariate regression analysis.

E.3.9 Barriers Related to the Globalization of Clinical Research

- The clinical research footprint is shifting overseas.

- There are a number of factors, including cost savings and shorter timelines, driving this shift and making it cheaper and easier to conduct trials outside the U.S.

- Ethical and scientific concerns may arise when conducting studies in other countries.

- Conducting trials at multiple sites across different countries magnifies the barriers associated with multicenter trials.

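E.4 Analysis of Barriers to Clinical Trials

In addition to identifying the costs of clinical trials, we analyzed the following barrier mitigation strategies: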

- Use of electronic health records (EHR)

- Looser trial enrollment restrictions

- Simplified clinical trial protocols and reduced amendments

- Reduced source data verification (SDV)

- Wider use of mobile technologies, including electronic data capture (EDC)

- Use of lower-cost facilities or at-home testing

- Priority Review vouchers

- Improvements in FDA review process efficiency and more frequent and timely interactions with FDA

Our analysis suggests that priority review vouchers and improvements in FDA review efficiency can help to shorten timelines, which in turn increases the expected net present value (eNPV) to the drug sponsor. Because these options affect the final stage of clinical research (mainly NDA/BLA approval), their overall dollar value for a sponsor at the start of clinical research is much lower due to discounting. Therefore, holding everything else constant, these options may be less appealing as strategies to stimulate drug development than alternatives that substantially lower costs early in the clinical research process. Use of lower-cost facilities/in-home testing and wider use of mobile technologies appear to be most effective in reducing costs across therapeutic areas and trial phases. Use of lower-cost facilities and/or in-home testing can reduce per-trial costs by up to $0.8 million (up to 16 percent of cost per study) in Phase 1, $4.3 million (up to 22 percent of cost per study) in Phase 2, and $9.1 million (up to 17 percent of cost per study) in Phase 3, depending on therapeutic area. Wider use of mobile technologies can result in very similar maximum savings: $0.4 million (up to eight percent of cost per study) in Phase 1, $2.4 million (up to 12 percent of cost per study) in Phase 2, $6.1 million (up to 12 percent of cost per study) in Phase 3, and $6.7 million (up to 13 percent of cost per study) in Phase 4. On the other hand, loosening trial enrollment restrictions and reducing SDV efforts have smaller impacts on costs, resulting in maximum savings of less than $0.1 million to $0.2 million per trial, representing approximately one percent of per-study costs in Phases 2 and 3.
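The discounting argument can be illustrated with simple arithmetic. The sketch below assumes a hypothetical 10 percent annual discount rate and uses the 7.5-year average time from the start of clinical testing to marketing (Section E.3.2) as the delay before a late-stage benefit is realized. It ignores success probabilities, which in a full eNPV calculation would shrink the late-stage value further. The function name `present_value` and the constants are illustrative only.

```python
def present_value(amount: float, years: float, rate: float) -> float:
    """Value today of `amount` received `years` from now at annual `rate`."""
    return amount / (1.0 + rate) ** years


RATE = 0.10            # hypothetical annual discount rate
YEARS_TO_MARKET = 7.5  # average start-of-testing-to-marketing time (E.3.2)

# The same $1 million benefit, realized at two different points in time.
early = present_value(1.0, 0.0, RATE)             # cost saving at the start of Phase 1
late = present_value(1.0, YEARS_TO_MARKET, RATE)  # gain realized around approval

# Undiscounted late-stage benefit needed to match a $1 million early saving.
break_even = 1.0 * (1.0 + RATE) ** YEARS_TO_MARKET

print(f"early saving: ${early:.2f}M")
print(f"late gain:    ${late:.2f}M")
print(f"late gain needed to match: ${break_even:.2f}M")
```

Under these assumptions, a dollar gained at approval is worth roughly half a dollar at the start of clinical research, so a late-stage benefit must be about twice as large to match an equal early cost saving.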

1 Introduction and Background

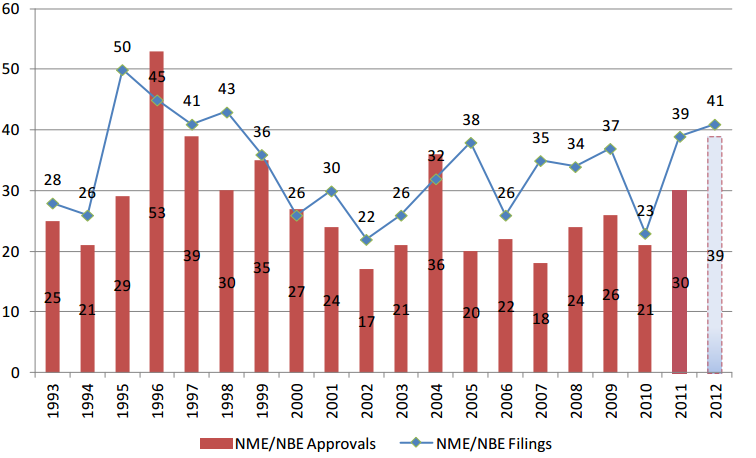

In calendar year 2012, the Food and Drug Administration (FDA) Center for Drug Evaluation and Research (CDER) approved 39 novel new drugs (i.e., new molecular entities (NMEs) and new biological entities (NBEs), including both novel drugs and biologics).1 While 39 approvals marks the highest number of NMEs/NBEs approved since 2004, drug companies are not filing as many applications with FDA for new drug approvals as they have in the past. Over the past 10 years (2003 to 2012), the average number of NME/NBE approvals per year has fallen from the previous decade’s average of 30 to 25.7 (see Figure 1). The average yearly number of NME/NBE filings has also fallen slightly over the same time period. A reduction in the drug application pipeline means fewer novel therapies in future years.

Figure 1: New Molecular Entity (NME) and New Biologic Entity (NBE) Filings and Approvals

Source: (U.S. Food and Drug Administration, 2013; Jenkins J. K., 2011)

Notes: CDER data as of 11/30/2012. Since applications are received and filed throughout a calendar year, the filed applications in a given calendar year do not necessarily correspond to an approval in the same calendar year. Certain filed submissions are within their 60-day filing review period and may not be filed upon completion of the review.

In 2004, to help drive new drug development and increase applications for novel new products, FDA launched its Critical Path Initiative, a strategy to help advance pharmaceutical innovation. Further, in 2011, Secretary Sebelius identified as one of the priority goals of the HHS, “accelerating the process of scientific discovery to patient care,” which includes building a national network of clinical research centers to enable clinical trials of promising compounds.

Developing a new drug is a costly endeavor and the ever-increasing cost of clinical research is often cited as one of the main reasons for the slowdown in FDA application filings. It takes approximately 10 to 15 years to bring a new drug from the laboratory to the pharmacy shelf (English, Lebovitz, & Giffin, 2010). During the initial years of non-clinical testing, the sponsor completes synthesis and purification of the drug and conducts limited animal testing. Approximately one out of one thousand compounds in preclinical testing appears promising enough to induce the sponsor to file an Investigational New Drug (IND) application (Eisenstein, et al., 2004). If the FDA reviews the IND and determines that it is reasonably safe to proceed, the sponsor then initiates the first phase of clinical research.

The clinical drug development stage consists of three phases. In Phase 1, clinical trials using healthy individuals are conducted to determine the drug’s basic properties and safety profile in humans. Typically, the drug remains in this stage for one to two years (DiMasi, Hansen, & Grabowski, 2003). In Phase 2, efficacy trials2 begin as the drug is administered to volunteers from the target population. At the end of Phase 2, the manufacturer meets with FDA officials to discuss the development process, continued human testing, any concerns the FDA may have, and the protocols for Phase 3, which is usually one of the most extensive and expensive parts of drug development. According to one source, mean phase lengths are 21.6 months (1.8 years) for Phase 1, 25.7 months (2.1 years) for Phase 2, and 30.5 months (2.5 years) for Phase 3 (DiMasi, Hansen, & Grabowski, 2003). Once Phase 3 is complete, the manufacturer files a New Drug Application (NDA). The period between completion of Phase 3 and drug approval typically lasts one to two years, including six to 10 months for the NDA review itself (or more if the drug is not approved after the first review). Toward the end of the NDA review stage, FDA and the drug sponsor may meet with an advisory committee made up of experts to present data and solicit advice on drug safety, effectiveness, and labeling. Once approved, the drug may be marketed in the U.S. with FDA-regulated labeling (Lipsky & Sharp, 2001). Sometimes additional studies are conducted after FDA approval, during general use of the drug by medical practitioners. These studies are referred to as Phase 4 studies in this report but are also known as post-marketing studies (Lipsky & Sharp, 2001).

The increasing cost of clinical research has significant implications for public health as it affects drug companies’ willingness to undertake clinical trials. Some researchers (Collier, 2009) argue that rising clinical trial costs have made the industry as a whole more risk averse and less willing to take chances on novel medicines. Many drug companies are now conducting clinical trials in other countries, such as China and India, where costs can be as much as 60 percent lower. Clinical research centers are also more closely scrutinizing the types of clinical trials they will take on, for fear that certain projects could put the center in a deficit (Collier, 2009). To increase clinical trial efficiency and reduce costs, companies have been looking at establishing effective surrogate endpoints3 (as opposed to clinical endpoints, which take longer and are more difficult to monitor) to identify failures before moving to costly Phase 3 trials. They are also looking for ways to move more rapidly to electronic data capture (EDC). To improve the recruitment process, drug companies are also investigating the use of genetic markers to screen prospective participants before enrolling them in studies, identifying those in whom the product is most likely to be effective and those likely to experience significant side effects.

Clinical trials can be sponsored by a variety of organizations, including industry, government agencies such as the National Institutes of Health (NIH), universities, and clinical research networks. Drug companies conduct clinical trials for a variety of reasons, including demonstrating safety and efficacy for new compounds, expanding the list of indications for previously approved compounds, improving market position by demonstrating superiority to other existing compounds, and increasing the amount of safety and efficacy evidence for payer reimbursement.

This study examines the decision-making process for those clinical trials that are:

- Designed to demonstrate safety and efficacy for new compounds, and

- Sponsored by industry.

The primary objectives of the study are: 1) to better understand sponsors’ strategies in the design and execution of clinical trials, 2) to identify factors that may delay, hinder, or lead to unsuccessfully completed trials, and 3) to develop an operational model of clinical trial decision-making to enable examination of what-if scenarios by end-users.

1 The number represents applications for New Molecular Entities (NMEs) filed under New Drug Applications (NDAs) and therapeutic biologics filed under original Biologic License Applications (BLAs).

2 According to a technical review prepared for the Agency for Healthcare Research and Quality (AHRQ), the distinction between efficacy and effectiveness trials is defined as follows: “Efficacy trials (explanatory trials) determine whether an intervention produces the expected result under ideal circumstances. Effectiveness trials (pragmatic trials) measure the degree of beneficial effect under ‘real world’ clinical settings” (RTI International, 2006).

3 While clinical endpoints are target outcomes that are measured directly (such as deaths), surrogate endpoints are intended to show the effect of the drug on a physiologic process or marker that is strongly correlated with a particular disease. For instance, CD4 cell counts might be used to assess the effectiveness of an antiviral medication in treating patients with human immunodeficiency virus (HIV) (Lipsky & Sharp, 2001).

2 Clinical Trial Decision-making Model

The existing literature on clinical trials primarily discusses the process of performing clinical trials—including statistical design issues and coordination problems among multiple centers and disciplines—and results, but few sources offer insights regarding the decision process of the sponsor (Hammons, Hilman, Kahan, & Neu, 1985). From the perspective of a drug sponsor operating under uncertainty, we postulate that the decision to undertake a clinical trial to demonstrate safety and efficacy is likely influenced by a variety of factors including:

- Potential market size for the drug, which in turn depends on:

  - Type of condition(s) (acute versus chronic, severity) the drug would treat;

  - Size of the patient population (current and future);

  - Number of existing drugs currently on the market that treat the same condition(s) and advances in treatment;

  - Existing incentives, such as the Orphan Drug Act and fast track FDA review, that affect how quickly the drug can be brought to market and offer financial incentives;

- Clinical stage (Phase 1, Phase 2, and Phase 3) costs that are dependent on a variety of factors, including but not limited to:

  - Patient accrual rates that depend upon selection criteria, the relative ease of screening eligible patients, and physician interest;

  - Administrative, physician, registered nurse (RN), and clinical research associate (CRA) capacity (i.e., number of protocols per RN/physician, number of patients per RN/physician);

  - Number of patients needed for the desired statistical precision;

  - Number of protocols;

  - Number of institutional review boards involved;

  - Number of investigator sites and their locations;

  - Cost of clinical data collection, management, and analysis;

  - Technologies for data collection and verification;

- Projected manufacturing costs upon FDA approval which would be influenced by whether the drug is a small molecule or a biologic.

The decision process for pursuing a clinical trial is also likely to vary by type of sponsor. A large established pharmaceutical company with deep pockets may be less risk averse and better positioned to undertake costly clinical trials whereas a small emerging company may find it difficult to allocate funding to clinical research, especially if the trials require a large patient population and multiple sites.

Further, some of the clinical trial pathway formulation decisions, such as which indication within a therapeutic category to target and how to time/sequence the trials, are inextricably linked to business realities. Research objectives coupled with financial circumstances can force a sponsor down a specific clinical trial pathway. For example, large established pharmaceutical companies often try to allocate resources based on the research portfolio and the potential to market the product. In contrast, small companies can be focused on whether they can finance the development and up to what point. Small companies are also often subject to results pressures from their investors, which can lead to inappropriate or “short-cut” development approaches.

The approach adopted in this study looks at the decision process from the point of view of an expected-revenue-maximizing sponsor in the face of uncertainty (or risk). As described in the following sections, the simplified clinical decision-making model incorporates the following considerations:

- Therapeutic area,

- Potential market size/revenues for the drug, and

- Clinical stage (Phase 1, Phase 2, Phase 3, and Phase 4) costs that are dependent on a variety of factors, including but not limited to:

  - Physician and RN costs;

  - Number of patients needed for the desired statistical precision;

  - Number of IRBs involved;

  - Number of investigator sites;

  - Cost of clinical data collection, management, and analysis; and

  - Cost of clinical procedures.

The following sections describe the data sources used (Section 2.1) in constructing the model, the conceptual framework (Section 2.2), the operational model (Section 2.3), and the model parameters (Section 2.4) in further detail.

2.1 Data Sources

In constructing an operational model of clinical trial decision-making, we compiled information from a variety of sources, including:

- Publicly available literature;

- Interviews with experts, FDA personnel, drug sponsors, and contract research organizations (CROs), as well as major academic clinical research centers;

- April 2012 FDA public hearing on the subject of Modernizing the Regulation of Clinical Trials and Approaches to Good Clinical Practice;

- Medidata Solutions databases;

  - Medidata Grants Manager® (PICAS®)

  - Medidata CRO Contractor® (CROCAS®)

  - Medidata Insights™

More information on the above data sources is provided below.

2.1.1 Publicly Available Literature

Although the literature on the early stages of the pharmaceutical decision-making process is not extensive, some research has characterized the process. We used this information to help define the components of the model. Examples include the work performed at the Massachusetts Institute of Technology (MIT) Center for Biomedical Innovation and the Clinical Trials Transformation Initiative (CTTI), a public-private partnership organization hosted by Duke University.4

Our literature search targeted several categories of literature: peer-reviewed articles in scientific journals, unpublished papers and presentations, white papers, gray literature, and news stories and occasional pieces appearing in newspapers and magazines or other print media outlets. Our search methodology featured systematic inquiries of the following databases:

- PubMed for peer-reviewed healthcare and biomedical journals;

- LexisNexis Academic for mass media and other periodical publications; and

- PAIS, Scopus, Web of Knowledge, and Embase for gray literature.

The search strategies differed for each category of literature and related database, but each query combined search terms using Boolean logic strings, such as “clinical trial AND cost*,” “barrier* AND clinical trials,” and “phase 1 clinical trial* AND cost*.”

4 CTTI comprises more than 60 organizations from across the clinical trial enterprise. Members include representatives of government agencies (the FDA, Centers for Medicare and Medicaid Services, Office for Human Research Protections, National Institutes of Health (NIH), and other national and international governmental bodies), industry representatives (pharmaceutical, biotechnology, device, and clinical research organizations), patient advocacy groups, professional societies, investigator groups, academic institutions, and other interested parties (Clinical Trials Transformation Initiative (CTTI), 2011).

2.1.2 Discussions with Experts, FDA Personnel, Drug Sponsors, Contract Research Organizations (CROs), and Academic Clinical Research Centers

Some of the information needed to characterize the decision process of a drug sponsor came from semi-structured discussions with our team of experts and other industry experts, FDA personnel, drug sponsors, CROs, and academic clinical research centers, including the University of Massachusetts, Johns Hopkins University, the University of Michigan, and the Mayo Clinic.

As the decision process varies between pharmaceutical and biotechnology companies as well as small and large firms, we interviewed representatives from these sectors and company sizes. We limited the number of interviews involving the same set of questions to fewer than 10. In total, we interviewed representatives from four small pharmaceutical/biotechnology companies; two large pharmaceutical/biotechnology companies; two CROs; and three independent expert consultants in addition to our team of experts. The expertise of those interviewed covered a wide range of therapeutic areas, including arthritis/pain/inflammatory diseases, cardiology, gastroenterology, immunology, metabolic diseases, ophthalmology, oncology, and infectious diseases. Of the six pharmaceutical/biotechnology companies interviewed, three had only a single U.S. office, while the other three had offices in multiple countries, which, in combination, span six continents.

We first emailed potential participants a cover letter explaining the purpose of the study and encouraging participation in our interviews. Almost everyone contacted for the study agreed to be interviewed. Next, we scheduled interviews with those who responded and were willing to participate. Appendix A presents the protocol used in these interviews. In general, the questions asked in each interview were targeted to the background of the interviewee, but most interviewees were asked about all three topic areas (the clinical trials decision-making process, barriers, and costs).

From these interviews, we collected information about how sponsors make the decision to move forward with the development of a new drug, the significance (in the respondents’ opinion and experience) of various cost components and barriers mentioned in the literature, and the types of changes they would advocate to address what they perceive as the most problematic barriers to conducting clinical trials in the U.S. This information helped us refine our model and was also used to more fully characterize the barriers to clinical trials and develop a list of potential barrier mitigation strategies.

In addition to our interviews with industry representatives, we spoke with individuals involved in CTTI and the MIT Center for Biomedical Innovation (specifically, its New Drugs Development Paradigm project), both noted earlier. These groups are working to improve the drug development process through joint research with stakeholder groups in the public and private sectors.

2.1.3 Medidata Solutions Databases

We used three proprietary databases on clinical trial costs, which are offered by Medidata Solutions, a global provider of cloud-based solutions for clinical research in life sciences, as part of the broad set of solutions available through the Medidata Clinical Cloud™:

- Medidata Grants Manager® (PICAS® database) – PICAS provides industry-wide negotiated site cost information. It is a database of negotiated investigator grants—it includes more than 250,000 grants and contracts and 27,000 protocols in over 1,400 indications—that provides benchmarked costs typically used for clinical trial budget planning.

- Medidata CRO Contractor® (CROCAS® database) – The CROCAS database contains thousands of negotiated outsourcing contracts. It includes comprehensive data from CRO contracts—detailed across such dimensions as therapeutic area, phase, and geography.

- Medidata Insights™ – Medidata Insights is a turnkey clinical analytics solution that provides advanced visualization of clinical operational performance metrics alongside company and industry benchmarks. The Insights metrics warehouse comprises data from more than 7,000 studies gathered from over 120 clinical trial sponsors.

We obtained custom aggregate tabulations from Medidata by therapeutic area, phase, and geography (domestic versus international) for the full range of cost elements associated with clinical trials (averages as well as variances). Cost components included the cost of IRB approvals, cost of protocols, patient recruitment costs, and administrative staff costs, among others. Appendix B provides the Medidata data elements and their descriptions.

2.2 Conceptual Framework

The literature review and discussions described above served to inform the conceptual framework for our model. We modeled the clinical trials decision-making process in the form of a decision tree that looks at the decision process from the point of view of an expected-revenue-maximizing sponsor in the face of uncertainty (or risk).

To illustrate our approach to modeling clinical trial decision-making, we consider a highly simplified example adapted from Damodaran (2007): the analysis of a New Molecular Entity (NME) for treating a hypothetical Indication X that has completed preclinical testing and is about to enter Phase 1 clinical trials. We further assume that the following information is available (we explain the sources for this type of information in Section 2.4 below):

- The Phase 1 trial is expected to cost $30 million and to require 100 participants to determine safety and dosage. The trial is expected to last one year, and there is a 67 percent likelihood that the drug will successfully complete the first phase.

- Phase 2 involves testing the NME’s effectiveness in treating Indication X on 250 participants over a period of around two years. This phase is expected to cost $45 million and the agent will need to demonstrate a statistically significant impact on a number of clinical endpoints to move on to the next phase. There is only a 41 percent likelihood that the drug will prove successful in treating Indication X.

- In Phase 3, the testing will be expanded to 4,000 patients. The phase will last four years and cost $210 million, and there is a 55 percent likelihood of success.

- Upon completion of Phase 3, the sponsor will need to submit an NDA to FDA, paying a user fee of $2 million, and there is an 83 percent likelihood of approval. FDA's decision on the NDA will take one year.

- Given the size of the patient population and average wholesale price for similar drugs, the net revenue stream for the NME, if it is approved, is estimated at $973 million over 15 years.

- The cost of capital for the sponsor is 15 percent.

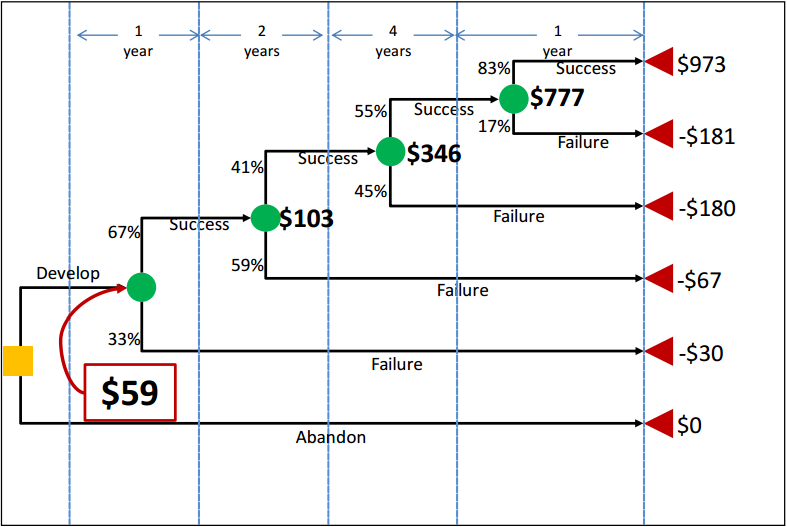

The decision tree for this NME can now be drawn, specifying the phases, the cash flows at each phase, and their respective probabilities (see Figure 2). The tree shows the likelihood of success at each phase and the marginal returns associated with each step. Because it takes time to go through the different phases of development, there is a time value effect that needs to be built into the expected returns computation for each path. The figure reflects this effect and computes the cumulative present value of returns from each path, using the 15 percent cost of capital as the sponsor's discount rate. When the time-discounted costs of conducting trials are subtracted from the present value of the returns, we are left with the net present value (NPV) of each possible outcome (Damodaran, 2007).

Figure 2: Drug Development Decision Tree Depicting Net Present Value (NPV) of Returns at Each Node

In Figure 2, the yellow square is the root decision node of interest. It is the point at which the revenue-maximizing sponsor is deciding whether or not to pursue development of the drug. The green circles (event/chance nodes) represent the possibility of success or failure at each phase, with the probabilities associated with each possibility appearing to the left of each branch. Finally, the red triangles are the end nodes. To the right of each end node is the NPV of that outcome to the sponsor. For example, if the drug completed all phases and successfully reached the market, the NPV of the cost and revenue streams would be $973 million in this scenario. By contrast, if the sponsor pushed forward with development but the drug failed at some point, the sponsor would incur the costs of the clinical trials without earning any revenues. Therefore, the other outcome nodes represent negative NPVs.

The dollar values appearing in bold next to the green chance nodes are calculated from right to left across the tree by multiplying the NPV of each outcome by the probability of that outcome occurring and summing the results. These dollar values thus represent the expected NPVs (eNPVs). For example, the eNPV at the start of the NDA/BLA review phase is equal to ($973 million × 83 percent) + (-$181 million × 17 percent), or $777 million. The $777 million can then be used in the same calculation for the chance node at Phase 3, and so forth until the value at the first chance node can be calculated. This number, $59 million in this example, represents the eNPV to the sponsor of moving forward with the development project at the time the decision is made to continue or abandon the new drug. This value reflects all of the possibilities that can unfold over time, clearly identifying the sub-optimal choices that a revenue-maximizing sponsor should reject. The decision tree also characterizes the full range of outcomes, from the worst-case scenario, failure in the NDA/BLA review stage, to the best-case scenario, FDA approval.
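The right-to-left calculation described above is commonly called rolling back (or folding back) the tree. The Python sketch below is a minimal, hypothetical illustration, not the model's actual implementation: a chance node is represented as a list of (probability, child) pairs, and an end node as its NPV in $ millions. Only the NDA/BLA review node is evaluated, because the failure-node NPVs for the earlier phases appear only in Figure 2; an earlier node would be built by nesting, for example `[(0.55, nda_node), (0.45, phase3_failure_npv)]`, where `phase3_failure_npv` is a placeholder name.

```python
from typing import List, Tuple, Union

# A node is either an end node (its NPV) or a chance node: a list of
# (probability, child) pairs.
Node = Union[float, List[Tuple[float, "Node"]]]


def expected_npv(node: Node) -> float:
    """Return the eNPV of a node by rolling the tree back from right to left."""
    if isinstance(node, (int, float)):
        return float(node)  # end node: the NPV of that outcome
    total_prob = sum(p for p, _ in node)
    if abs(total_prob - 1.0) > 1e-9:
        raise ValueError(f"branch probabilities sum to {total_prob}, not 1")
    # Chance node: probability-weighted sum of the children's values.
    return sum(p * expected_npv(child) for p, child in node)


# NDA/BLA review node from the worked example ($ millions):
# 83% approval -> $973M; 17% rejection -> -$181M.
nda_node: Node = [(0.83, 973.0), (0.17, -181.0)]
print(round(expected_npv(nda_node), 2))  # 776.82
```

Representing the tree as nested data keeps the rollback logic independent of how many phases the tree contains.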

Phase 4 post-marketing studies, as described earlier, do not appear in Figure 2 as part of the decision tree because they do not play a role in determining which branch or outcome node a new drug ends up on in the same way that Phase 1, 2, and 3 trials do. In other words, they take place after the drug is approved (if they take place at all), and the consequences of success/failure in Phase 4 are not within the scope of this model. However, Phase 4 costs, if they occur, can be reflected in the values shown in the tree. The cost of these studies would be discounted back to the start of the project (in the same way all of the other costs are) and included in the branch representing successful completion of all prior phases and approval of the new drug. As Phase 4 studies occur post-approval, no costs associated with Phase 4 would be included on the other branches (on which the drug is not approved).

It is possible to examine the specifics of clinical trial formulation decisions in the context of this framework. For example, the availability of biomarkers for Indication X in the above example can decrease clinical trial costs by reducing the need to recruit large pools of patients and possibly by shortening trial duration. Similarly, the use of adaptive designs can yield shorter and less expensive clinical studies.5 Both of these approaches can be evaluated within the above framework by parameterizing (1) the costs associated with clinical trial event nodes so that they are scalable and (2) clinical trial duration.
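To illustrate how scalable costs and durations enter the calculation, the sketch below computes the eNPV of a Phase 1 → Phase 2 → Phase 3 → NDA/BLA chain in closed form, which is equivalent to rolling back the tree. It rests on assumptions that are ours, not the source's: each stage's cost is paid at the start of the stage (the convention the operational model uses, per Section 3), post-approval revenue is supplied as a value at the launch date, and the `REVENUE_AT_LAUNCH` figure is an invented placeholder. Its output is therefore not expected to reproduce the $59 million in Figure 2, whose cash-flow timing the text does not fully specify.

```python
def tree_enpv(costs, durations, probs, revenue_at_launch, rate,
              cost_scale=1.0, duration_scale=1.0):
    """eNPV at Year 0 of a sequential development program.

    costs[i]     -- cost of stage i, assumed paid at the start of stage i
    durations[i] -- length of stage i in years
    probs[i]     -- probability of successfully completing stage i
    revenue_at_launch -- value of post-approval revenue at the launch date
    """
    if not (len(costs) == len(durations) == len(probs)):
        raise ValueError("costs, durations and probs must have equal length")
    start = 0.0      # start time (years) of the current stage
    pv_costs = 0.0   # discounted costs sunk up to and including this stage
    p_reach = 1.0    # probability of reaching the current stage
    enpv = 0.0
    for cost, length, p in zip(costs, durations, probs):
        pv_costs += cost_scale * cost / (1 + rate) ** start
        start += duration_scale * length
        # Failure end node: the sponsor bears the costs but earns no revenue.
        enpv += p_reach * (1 - p) * (-pv_costs)
        p_reach *= p
    # Success end node: discounted launch value minus all discounted costs.
    enpv += p_reach * (revenue_at_launch / (1 + rate) ** start - pv_costs)
    return enpv


# Example inputs from the text ($ millions, years); the NDA/BLA stage is the
# $2M user fee with a one-year review. REVENUE_AT_LAUNCH is hypothetical.
COSTS = [30, 45, 210, 2]
DURATIONS = [1, 2, 4, 1]
PROBS = [0.67, 0.41, 0.55, 0.83]
REVENUE_AT_LAUNCH = 2500.0  # hypothetical placeholder, not from the source

base = tree_enpv(COSTS, DURATIONS, PROBS, REVENUE_AT_LAUNCH, 0.15)
cheaper = tree_enpv(COSTS, DURATIONS, PROBS, REVENUE_AT_LAUNCH, 0.15,
                    cost_scale=0.8)  # e.g., 20% lower trial costs
print(f"base eNPV: {base:,.1f}; with 20% lower costs: {cheaper:,.1f}")
```

Lowering `cost_scale` always raises the eNPV in this setup. Changing `duration_scale` moves both costs and revenue in time, so its net effect depends on the inputs.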

The framework can also accommodate changes in the sponsor's cost of capital. For example, in the scenario described above, it is possible that the NME will be approved for a secondary indication as well as a primary indication. If the drug is used to treat multiple conditions, its sales and expected returns may be more stable than they would be if it were approved for only a single indication. To reflect this anticipated increase in stability, the drug sponsor may determine that a lower discount rate than otherwise expected is more appropriate.
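A short sketch shows why a lower discount rate raises the value of a stable revenue stream: the present value of a level annual cash flow C received at the end of each of n years is C(1 − (1 + r)^−n)/r, which increases as r falls. The $100 million-per-year, 15-year stream and the 12 percent alternative rate below are purely hypothetical illustrations.

```python
def pv_level_stream(annual_amount, years, rate):
    """PV of a level cash flow received at the end of each year (annuity)."""
    if rate == 0:
        return annual_amount * years
    return annual_amount * (1 - (1 + rate) ** -years) / rate


# Hypothetical stable revenue stream: $100M per year for 15 years.
for r in (0.15, 0.12):
    print(f"discount rate {r:.0%}: PV = ${pv_level_stream(100.0, 15, r):,.1f}M")
```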

Furthermore, in the context of the above basic framework, barriers can be thought of as factors that contribute to the cost of each event node and/or affect the probability of success. For example, a significant group of barriers to clinical trials is administrative. A study at the Vanderbilt-Ingram Cancer Center and affiliated sites found that 17 to 30 major administrative steps were required to achieve approval of a clinical trial (Dilts & Sandler, 2006). Such barriers increase the cost of clinical trials, thereby reducing the eNPV of drug development from the point of view of the drug sponsor. In the above model, alleviation of such barriers could be captured in the form of reduced clinical trial costs and possibly reduced duration.

5 One topic often discussed with adaptive designs is the use of seamless Phase 2/3 studies. Some Phase 2 studies are similar to subsequent Phase 3 studies. The time between Phase 2 and Phase 3 can be decreased by treating the Phase 2 study as a segment of the Phase 3 study. Even though this reduces the time to submission, it might also decrease the amount of information that can be gained relative to a complete and detailed Phase 2 program. In general, adaptive designs are subject to this criticism.

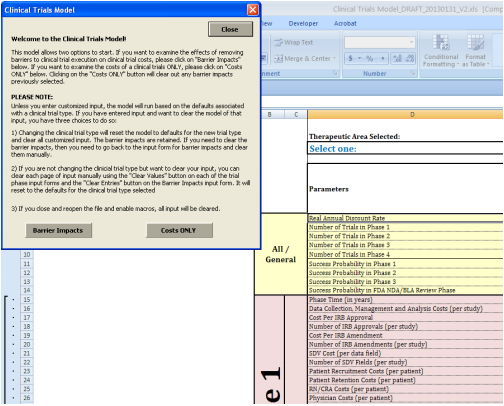

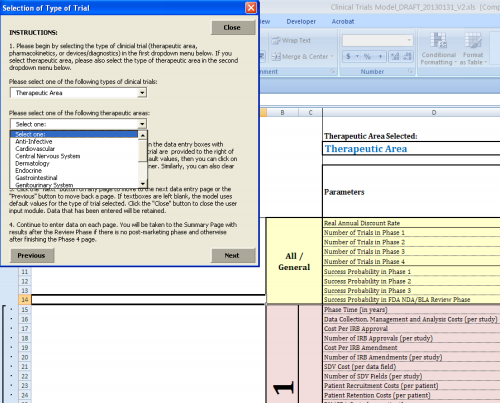

2.3 Operational Model

Although the decision tree analysis format is invaluable in characterizing a range of clinical trial formulation possibilities, the modeling can become extremely complex as the number of event and decision nodes increases. Thus, while the operational model allows the user to enter customized cost scenarios for a given therapeutic area, it does not allow for changes in the number of decision or event nodes.

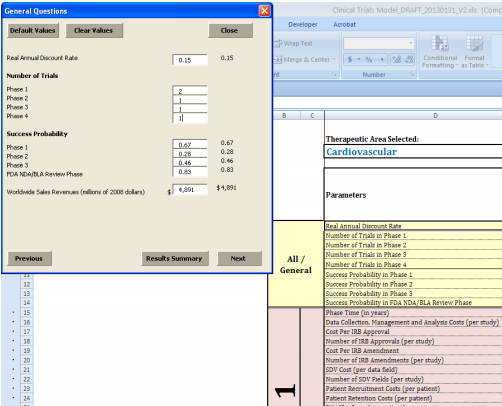

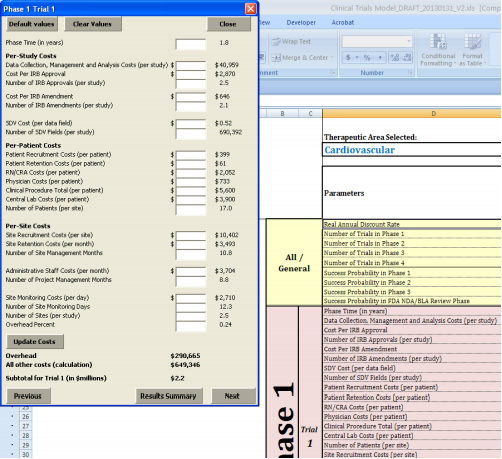

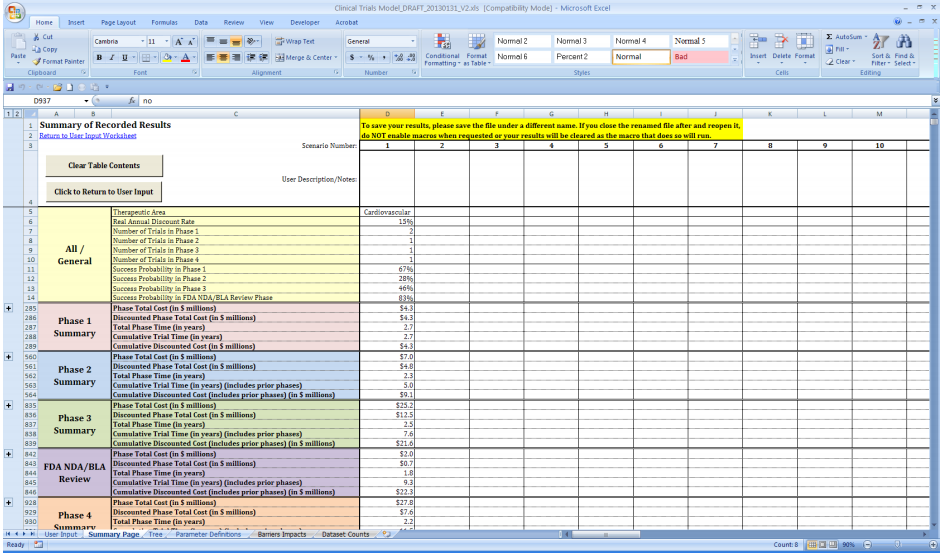

We developed the operational model in Microsoft Excel™ for ease of use and sharing, with a user interface coded in Visual Basic. The model is structured such that the user makes all selections through a guided user form, which enables the user to input project-specific values while the underlying worksheets and cost aggregation formulas are protected from editing. The interface is designed to allow the user to compare a “custom” scenario, which uses the values entered in the user form, to a “default” scenario, which draws on average clinical trial costs and other parameters from the literature and data provided by Medidata Solutions (described in greater detail below). The model also allows the user to blend default and custom values as desired. Further details on the uses and features of the model can be found in Appendix C.

2.4 Model Parameters

The clinical trial cost/decision-making model described above requires numerous data points, including phase durations, success probabilities, expected revenues, and a discount rate, as well as a full range of itemized costs associated with clinical trials, broken down by phase and therapeutic area. By default, the model uses a real annual discount rate of 15 percent, based on input from our interviews with drug sponsors. We were able to obtain some of the other data needed from the available clinical research literature. Phase durations were one such parameter. Though they are not differentiated by therapeutic area, DiMasi, Hansen, & Grabowski (2003) provide mean phase lengths of 21.6 months (1.8 years) for Phase 1, 25.7 months (2.1 years) for Phase 2, and 30.5 months (2.5 years) for Phase 3. The NDA/BLA review time, as we define it,6 runs from the first submission of an NDA/BLA to regulatory marketing approval and comes from DiMasi, Grabowski, & Vernon (2004). Although trial phase times generally do not reflect differences between therapeutic areas, therapeutic-area-specific NDA/BLA review times were available and used for a select list of therapeutic areas.

Clinical trial success probabilities are available from two recent studies, one conducted by DiMasi and colleagues (Tufts University) in 2010 (DiMasi, Feldman, Seckler, & Wilson, 2010) and another conducted by BioMedTracker in 2011 (Hay, Rosenthal, Thomas, & Craighead, 2011). The two studies, however, provide different success rate estimates; for example, DiMasi, et al. (2010) found an overall success rate of 19 percent, while Hay and colleagues (2011) arrived at nine percent. The difference can be attributed to the fact that the two studies drew on different pools of data. DiMasi, et al. (2010) collected data on 1,738 drugs that entered Phase 1 between 1993 and 2004 and were developed by the 50 largest pharmaceutical companies. The BioMedTracker study covered 4,275 drugs from biotechnology and pharmaceutical companies of all sizes that were in any phase of development between October 2003 and December 2010 (Hay, Rosenthal, Thomas, & Craighead, 2011).

As the BioMedTracker study was more recent and included more drugs and a broader range of companies, we opted to use the success probabilities reported by BioMedTracker in our model. These success probabilities were broken down by clinical trial phase and, for Phase 2 and Phase 3, by therapeutic area as well. For Phase 1, we used 67 percent for all therapeutic areas. For Phases 2 and 3 and the NDA/BLA review phase, we used therapeutic-area-specific percentages where available and general success probabilities (41, 65, and 83 percent, respectively) for therapeutic areas for which no specific probabilities were reported. All probabilities used in the model were for lead indications.
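The fallback rule described above (a therapeutic-area-specific probability when one is reported, otherwise the general value) can be sketched as a simple lookup. In the sketch below, the general probabilities are the ones quoted in the text, while the area name and its overrides are hypothetical and are not the BioMedTracker figures used in the model. Multiplying the stage probabilities gives the cumulative probability that a drug entering Phase 1 is approved.

```python
from math import prod

# General (lead-indication) success probabilities quoted in the text.
GENERAL = {"phase1": 0.67, "phase2": 0.41, "phase3": 0.65, "nda": 0.83}

# Hypothetical therapeutic-area-specific values, for illustration only;
# these are NOT the BioMedTracker figures used in the model.
AREA_SPECIFIC = {"hypothetical_area": {"phase2": 0.30, "phase3": 0.60}}

STAGES = ("phase1", "phase2", "phase3", "nda")


def stage_probability(area, stage):
    """Area-specific probability when reported, otherwise the general one."""
    if stage == "phase1":
        return GENERAL["phase1"]  # the text applies 67% to all areas
    return AREA_SPECIFIC.get(area, {}).get(stage, GENERAL[stage])


def probability_of_approval(area):
    """Cumulative probability that a drug entering Phase 1 is approved."""
    return prod(stage_probability(area, s) for s in STAGES)


print(f"{probability_of_approval('unlisted_area'):.3f}")
print(f"{probability_of_approval('hypothetical_area'):.3f}")
```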

To construct the model’s “baseline scenario,” we obtained itemized clinical trial cost data from Medidata Solutions (hereafter “Medidata”), which compiles data from a portfolio of CRO contracts, investigator grants/contracts, and clinical trial protocols. Medidata Grants Manager’s database, PICAS®, and CRO Contractor’s database, CROCAS®, contain numerous data elements derived from actual negotiated contracts, and these resources are widely used by pharmaceutical companies, CROs, and academic researchers to identify prevailing rates for trial planning, budget development, and grant negotiation (Medidata Solutions, 2012). We obtained the number of clinical investigator sites per study/protocol from Medidata Insights™, which draws on 7,000 study protocols and allows on-demand views of study performance metrics by therapeutic area, study phase, geography, and more.

The custom tabulation received from Medidata contained means and variances for a wide range of clinical trial cost elements, including study-level costs (such as IRB approvals and source data verification (SDV) costs), patient-level costs (such as recruitment and clinical procedure costs), and site-level costs (such as monitoring and project management). The number of planned patients per site and the number of sites per study were also provided. A complete list of these data elements can be found in Appendix B, along with more detailed descriptions of each field, unit specifications, and sources. The data are from 2004 and later and have not been adjusted for inflation by Medidata. Because the data points represent averages across this range of time and cannot be assigned to specific years, we were unable to adjust them for inflation, which is one of the study's limitations.

Medidata provided means and variances of costs by trial phase (Phases 1 through 4), geographic region (U.S., global, and rest of world), and therapeutic area. For the purposes of this analysis, we focused on the data points specific to U.S. trials. The therapeutic areas for which Medidata provided data were anti-infective, cardiovascular, central nervous system, dermatology, devices and diagnostics,7 endocrine, gastrointestinal, genitourinary system, hematology, immunomodulation, oncology, ophthalmology, pain and anesthesia, pharmacokinetics,8 and respiratory system. To the extent possible, we attempted to match the therapeutic-area success probabilities from BioMedTracker to the therapeutic area categories used by Medidata. Some additional data cleaning steps were performed using the statistical software Stata; these are outlined in Appendix E.

On the revenue side, we used an estimate from a study by DiMasi, Grabowski, & Vernon (2004), which reports worldwide sales revenues over the product life cycle for new drugs approved in the United States from 1990 to 1994. Figures were available for some specific indications; for the others, we used the reported figure for “All Drugs.” The figures reported by DiMasi, Grabowski, & Vernon (2004) are NPVs discounted at 11 percent to the launch year and expressed in year 2000 dollars. We therefore inflated the revenue figures to 2008 dollars (the midpoint between 2004 and 2012, the range covered by the itemized cost data) using the Bureau of Labor Statistics (BLS) producer price index for commodities in the category “Drugs and Pharmaceuticals” (series WPU063).
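The inflation adjustment amounts to multiplying each year-2000 figure by the ratio of the index level in 2008 to the level in 2000. In the sketch below, the index levels and the revenue figure are hypothetical placeholders, not actual values of BLS series WPU063 or of the DiMasi, Grabowski, & Vernon (2004) estimates. Whether annual averages or a particular month's index value should be used is an assumption left to the analyst.

```python
def inflate(value, index_from, index_to):
    """Convert a dollar amount between years using price-index levels."""
    if index_from <= 0:
        raise ValueError("index level must be positive")
    return value * index_to / index_from


# Placeholder index levels (hypothetical); substitute the actual WPU063
# levels for 2000 and 2008 before using this for real calculations.
PPI_2000 = 100.0
PPI_2008 = 130.0
revenue_2000_dollars = 500.0  # hypothetical NPV at launch, $ millions
print(inflate(revenue_2000_dollars, PPI_2000, PPI_2008))  # 650.0
```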

6 From FDA’s perspective, each submission has a set time period (priority or non-priority review) that does not include time between submissions; however, that time is included in our definition of the NDA/BLA review phase time for the purposes of this analysis.

7 The “Devices and Diagnostics” category includes any industry-sponsored studies where a device or drug delivery system is being studied instead of a drug. Among the devices included in this category are stents, implants, joint replacements, inhalers, and blood sugar monitoring devices.

8 Pharmacokinetic (PK) studies are often conducted at the discovery or candidate selection stages of a development program. These studies look at the mechanisms of absorption and distribution of a drug candidate as well as the rate at which a drug action begins and the duration of this effect.

3 Analysis of Costs

We worked closely with Medidata to determine the appropriate methodology for aggregating the itemized costs that characterize the overall cost of a clinical trial. To obtain totals for each individual trial within a given phase, we grouped the cost components into per-study costs, per-patient costs, and per-site costs, where:

- Per-study costs are the sum of:

  - Data Collection, Management and Analysis Costs (per study);

  - Cost Per Institutional Review Board (IRB) Approval × Number of IRB Approvals (per study);

  - Cost Per IRB Amendment × Number of IRB Amendments (per study);

  - SDV Cost (per data field) × Number of SDV Fields (per study); and

  - The total of all per-site costs listed below, multiplied by Number of Sites (per study).

- Per-site costs are the sum of:

  - The total of all per-patient costs listed below, multiplied by Number of Planned Patients (per site);

  - Site Recruitment Costs (per site);

  - Site Retention Costs (per month) × Number of Site Management Months;

  - Administrative Staff Costs (per month) × Number of Project Management Months; and

  - Site Monitoring Costs (per day) × Number of Site Monitoring Days.

- Per-patient costs are the sum of:

  - Patient Recruitment Costs (per patient);

  - Patient Retention Costs (per patient);

  - Registered Nurse (RN)/Clinical Research Associate (CRA) Costs (per patient);

  - Physician Costs (per patient);

  - Clinical Procedure Total (per patient); and

  - Central Lab Costs (per patient).
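The nested structure of this aggregation can be sketched in a few lines of Python. The dataclasses and field names below are hypothetical stand-ins for the Medidata data elements listed in Appendix B, and the sketch covers only the itemized per-study total; site overhead and All Other Costs are added afterward, as described next.

```python
from dataclasses import dataclass


@dataclass
class PerPatient:
    recruitment: float
    retention: float
    rn_cra: float
    physician: float
    clinical_procedures: float
    central_lab: float

    def total(self) -> float:
        return (self.recruitment + self.retention + self.rn_cra
                + self.physician + self.clinical_procedures + self.central_lab)


@dataclass
class PerSite:
    planned_patients: float
    site_recruitment: float
    retention_per_month: float
    site_management_months: float
    admin_staff_per_month: float
    project_management_months: float
    monitoring_per_day: float
    monitoring_days: float

    def total(self, patient: PerPatient) -> float:
        return (patient.total() * self.planned_patients
                + self.site_recruitment
                + self.retention_per_month * self.site_management_months
                + self.admin_staff_per_month * self.project_management_months
                + self.monitoring_per_day * self.monitoring_days)


@dataclass
class PerStudy:
    data_collection: float
    cost_per_irb_approval: float
    irb_approvals: float
    cost_per_irb_amendment: float
    irb_amendments: float
    sdv_cost_per_field: float
    sdv_fields: float
    sites: float

    def total(self, site: PerSite, patient: PerPatient) -> float:
        """Itemized per-study cost, before site overhead and All Other Costs."""
        return (self.data_collection
                + self.cost_per_irb_approval * self.irb_approvals
                + self.cost_per_irb_amendment * self.irb_amendments
                + self.sdv_cost_per_field * self.sdv_fields
                + site.total(patient) * self.sites)


# Toy inputs (hypothetical round numbers, not Medidata values).
patient = PerPatient(1, 1, 1, 1, 1, 1)          # 6 per patient
site = PerSite(10, 5, 2, 3, 1, 4, 2, 5)         # 60 + 5 + 6 + 4 + 10 = 85
study = PerStudy(100, 10, 1, 5, 2, 1, 20, 2)    # 100 + 10 + 10 + 20 + 2 * 85
print(study.total(site, patient))  # 310
```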

To arrive at a best approximation of the total trial cost, two additional costs had to be added: site overhead and all other costs not captured in the itemized categories listed above. We first added site overhead as a percentage of the sum of the above per-study costs; Medidata estimates site overhead at roughly 20 to 27 percent of per-study costs, and we used 25 percent.9 According to Medidata, the computed per-study costs plus the 25 percent site overhead account for only approximately 70 percent of total trial costs. Still missing from this total are the sponsor's costs of running the study and other costs not captured elsewhere. We therefore estimated an additional cost category, “All Other Costs,” as 30 percent of the sum of the computed per-study costs and the 25 percent site overhead, to approximate these missing costs in our totals.
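The two extrapolation steps amount to fixed multipliers on the itemized per-study total. The sketch below hard-codes the 25 percent site overhead and the 30 percent All Other Costs rates from the text. The function and key names are illustrative. Together, the two steps multiply the itemized total by 1.25 × 1.30 = 1.625.

```python
SITE_OVERHEAD_RATE = 0.25  # Medidata range ~20-27%; 25% used per the text
ALL_OTHER_RATE = 0.30      # applied to (per-study costs + site overhead)


def extrapolated_trial_cost(itemized_per_study: float) -> dict:
    """Add site overhead and All Other Costs to the itemized per-study total."""
    site_overhead = SITE_OVERHEAD_RATE * itemized_per_study
    subtotal = itemized_per_study + site_overhead
    all_other = ALL_OTHER_RATE * subtotal
    return {
        "itemized": itemized_per_study,
        "site_overhead": site_overhead,
        "all_other": all_other,
        "total": subtotal + all_other,
    }


print(extrapolated_trial_cost(2_000_000.0)["total"])
```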

We applied the cost aggregation methodology outlined above to all trials within Phases 1, 2, 3, and 4. In the operational model developed, if the user specifies that the study will include more than one trial per phase, the cost totals for each trial are summed to get an overall total cost for the phase.

Combining the lengths of time associated with each trial within a phase was somewhat more complex, as there is a range of possibilities. One possibility is that all trials within a given phase are conducted concurrently, in which case the total length of time for the phase would be equal to the maximum length of time needed to complete any individual trial within that phase. For example, if there were two Phase 2 trials, one taking 1.5 years and the other 2 years, the total length of Phase 2 would be 2 years, assuming the trials ran at the same time. At the other extreme, the trials within a phase might be completed sequentially with no overlap, in which case the lengths of time specified would need to be summed to arrive at the total phase length. In the previous example, this would mean that the total length of Phase 2 is 1.5 plus 2, or 3.5 years. To take into account both extremes and all possibilities in between, we assumed that the phase length in years across all trials associated with a given phase is the average of these two measures (the maximum trial length specified and the total of all lengths specified); in the example, this is (2 + 3.5) ÷ 2 = 2.75 years. If only one trial is specified for a given phase in the operational model, this average is simply equal to the length given for that trial.
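The phase-length rule can be expressed as a one-line function. The sketch below is an illustration; the function name is ours, not the operational model's.

```python
def phase_length(trial_lengths):
    """Average of the fully concurrent (max) and fully sequential (sum) cases."""
    if not trial_lengths:
        raise ValueError("at least one trial length is required")
    return (max(trial_lengths) + sum(trial_lengths)) / 2


print(phase_length([1.5, 2.0]))  # 2.75
print(phase_length([2.0]))       # 2.0
```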

The operational model discounts the total costs for each phase back to Year 0 (before Phase 1 trials are started) using the real annual discount rate (15 percent for the default scenario). Further, the model assumes that all costs associated with each phase are incurred at the start of the phase; therefore, Phase 1 costs are not discounted, Phase 2 costs are discounted over the length of Phase 1, Phase 3 costs are discounted over the combined lengths of Phases 1 and 2, and so forth.
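The start-of-phase convention means that each phase's cost is discounted over the combined length of all earlier phases. The sketch below shows this discounting step. As an illustration, it uses the example costs and durations from Section 2.2 rather than the operational model's default parameters, and the function name is hypothetical.

```python
def discount_to_year0(phase_costs, phase_lengths, rate=0.15):
    """Discount each phase's total cost back to Year 0.

    Costs for phase j are assumed to be incurred at the start of phase j,
    i.e., after all earlier phases have been completed.
    """
    if len(phase_costs) != len(phase_lengths):
        raise ValueError("need one length per phase")
    discounted = []
    start = 0.0  # years elapsed before the current phase begins
    for cost, length in zip(phase_costs, phase_lengths):
        discounted.append(cost / (1 + rate) ** start)
        start += length
    return discounted


costs = [30.0, 45.0, 210.0]  # example Phase 1-3 costs from Section 2.2, $M
lengths = [1.0, 2.0, 4.0]    # example Phase 1-3 lengths, years
print([round(c, 1) for c in discount_to_year0(costs, lengths)])  # [30.0, 39.1, 138.1]
```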

While we apply discounting to trial costs in the operational model, the analysis presented below is based on raw (i.e., un-discounted) cost figures. Further, we exclude Devices & Diagnostics as well as Pharmacokinetics categories from the analysis below as these are not within the scope of this study.10

9 Site overhead is not always applied to all costs in a negotiated clinical investigator contract by the clinical site. In some cases, the site may negotiate overhead only on certain portions of the contract such as clinical procedures. Thus, 25 percent of total per-study costs is likely to be an overestimate of actual overhead costs per study.

10 Because the data were available for both categories, we left them in the operational model.

3.1 Costs by Therapeutic Area

Table 1 presents the total costs for each of the therapeutic areas included in our model by clinical trial phase (assuming one trial per phase and not accounting for failures). From the table, immunomodulation per-study costs ($6.6 million) are the highest in Phase 1, with costs of studies in ophthalmology ($5.3 million) and respiratory system ($5.2 million) ranking second and third, respectively. In Phase 2, hematology trial costs ($19.6 million) rank first, followed by pain and anesthesia ($17.0 million) and immunomodulation ($16.0 million). The most costly Phase 3 studies are in pain and anesthesia ($52.9 million), with studies in ophthalmology ($30.7 million) and cardiovascular ($25.2 million) ranking second and third, respectively. In Phase 4, respiratory system trial costs ($72.9 million) rank first, followed by oncology ($38.9 million) and pain and anesthesia ($32.1 million) study costs. Overall, the therapeutic area with the highest clinical research burden across all phases is respiratory system ($115.3 million), followed by pain and anesthesia ($105.4 million) and oncology ($78.6 million) trials (see Figure 3). On the other hand, trials in central nervous system, dermatology, and genitourinary system tend to cost the least overall.

Table 1: Total Per-Study Costs (in $ Millions), by Phase and Therapeutic Area [a] [b]

| Therapeutic Area | Phase 1 | Phase 2 | Phase 3 | Phase 1, 2, & 3 Subtotal [d] | FDA NDA/BLA Review Phase [c] | Phase 4 | Total [d] |
|---|---|---|---|---|---|---|---|
| Anti-Infective | $4.2 (5) | $14.2 (6) | $22.8 (5) | $41.2 (3) | $2.0 | $11.0 (12) | $54.2 (10) |
| Cardiovascular | $2.2 (9) | $7.0 (13) | $25.2 (3) | $34.4 (10) | $2.0 | $27.8 (4) | $64.1 (6) |
| Central Nervous System | $3.9 (6) | $13.9 (7) | $19.2 (7) | $37.0 (6) | $2.0 | $14.1 (11) | $53.1 (11) |
| Dermatology | $1.8 (10) | $8.9 (12) | $11.5 (13) | $22.2 (13) | $2.0 | $25.2 (7) | $49.3 (12) |
| Endocrine | $1.4 (12) | $12.1 (10) | $17.0 (9) | $30.5 (12) | $2.0 | $26.7 (6) | $59.1 (7) |
| Gastrointestinal | $2.4 (8) | $15.8 (4) | $14.5 (11) | $32.7 (11) | $2.0 | $21.8 (8) | $56.4 (8) |
| Genitourinary System | $3.1 (7) | $14.6 (5) | $17.5 (8) | $35.2 (8) | $2.0 | $6.8 (13) | $44.0 (13) |
| Hematology | $1.7 (11) | $19.6 (1) | $15.0 (10) | $36.3 (7) | $2.0 | $27.0 (5) | $65.2 (5) |
| Immunomodulation | $6.6 (1) | $16.0 (3) | $11.9 (12) | $34.5 (9) | $2.0 | $19.8 (9) | $56.2 (9) |
| Oncology | $4.5 (4) | $11.2 (11) | $22.1 (6) | $37.8 (5) | $2.0 | $38.9 (2) | $78.6 (3) |
| Ophthalmology | $5.3 (2) | $13.8 (8) | $30.7 (2) | $49.8 (2) | $2.0 | $17.6 (10) | $69.4 (4) |
| Pain and Anesthesia | $1.4 (13) | $17.0 (2) | $52.9 (1) | $71.3 (1) | $2.0 | $32.1 (3) | $105.4 (2) |
| Respiratory System | $5.2 (3) | $12.2 (9) | $23.1 (4) | $40.5 (4) | $2.0 | $72.9 (1) | $115.3 (1) |

[a] The numbers in parentheses represent the rank in descending order.

[b] The cost for each phase assumes that a single trial (i.e., study) is conducted.

[c] The category represents the New Drug Application (NDA)/Biologics License Application (BLA) filing fee for an application requiring clinical data and does not include any establishment or product fees that the filing entity might need to pay in addition.

[d] Totals may not add up due to rounding.

Figure 3: Clinical Trial Costs (in $ Millions) by Phase and Therapeutic Area

As can be observed from Table 1, Phase 2 costs are lower than Phase 3 costs for all but three therapeutic areas: gastrointestinal, hematology, and immunomodulation. This somewhat counterintuitive relationship is due to a variety of factors, including higher data collection costs, administrative staff costs, and site recruitment costs in Phase 2 than in Phase 3 for these therapeutic areas.

3.2 Costs by Trial Phase

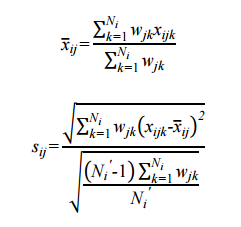

To compare average costs by phase across all therapeutic areas, we computed a weighted mean cost,  , and its weighted standard deviation, sij , , for each cost component, i, and clinical trial phase, j, where the weights are the total number of contracts (i.e., sum of investigator and contractor contracts contributing to the PICAS® and CROCAS® datasets11) such that

, and its weighted standard deviation, sij , , for each cost component, i, and clinical trial phase, j, where the weights are the total number of contracts (i.e., sum of investigator and contractor contracts contributing to the PICAS® and CROCAS® datasets11) such that

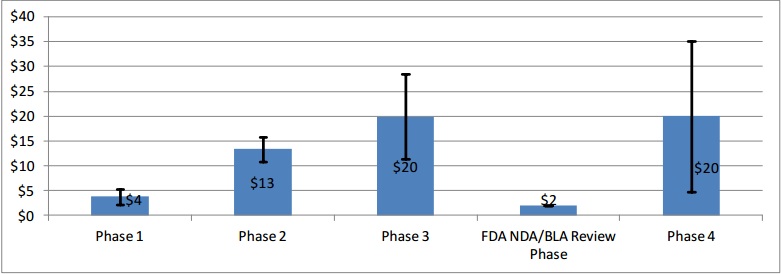

where wjk is the total number of contracts available for the phase and therapeutic area, k, combination; xijk is the reported mean for cost component i, clinical trial phase j, and therapeutic area k; is the simple average of cost component i for that phase j across all therapeutic areas;Ni is the number of therapeutic areas that are associated with the phase in question; and Ni' is the number of non-zero weights. As one would expect, the average per-study costs across all therapeutic areas increase as clinical development proceeds from Phase 1 to Phases 2 and 3 (see Figure 4).

is the simple average of cost component i for that phase j across all therapeutic areas;Ni is the number of therapeutic areas that are associated with the phase in question; and Ni' is the number of non-zero weights. As one would expect, the average per-study costs across all therapeutic areas increase as clinical development proceeds from Phase 1 to Phases 2 and 3 (see Figure 4).
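The weighting scheme described above can be sketched as follows. This is a minimal illustration, not the study's code: the cost values and contract counts are hypothetical (the real counts are confidential), and, following the definitions in the text, the deviations in the standard deviation are taken from the simple average. A common textbook variant centers the deviations on the weighted mean instead; replacing `simple_avg` with `weighted_mean(x, w)` gives that version.

```python
import math


def weighted_mean(x, w):
    """Weighted mean of per-therapeutic-area means x, weighted by contract counts w."""
    return sum(wk * xk for wk, xk in zip(w, x)) / sum(w)


def weighted_sd(x, w):
    """Weighted standard deviation with the (N' - 1) / N' correction, where N'
    is the number of non-zero weights. As described in the text, deviations are
    measured from the simple (unweighted) average of x."""
    simple_avg = sum(x) / len(x)
    n_nonzero = sum(1 for wk in w if wk != 0)
    numerator = sum(wk * (xk - simple_avg) ** 2 for wk, xk in zip(w, x))
    denominator = (n_nonzero - 1) / n_nonzero * sum(w)
    return math.sqrt(numerator / denominator)


# Hypothetical per-study means ($) for one cost component and phase in four
# therapeutic areas, with made-up contract counts (one area has no contracts).
costs = [120_000.0, 95_000.0, 150_000.0, 110_000.0]
contracts = [40, 10, 25, 0]

print(round(weighted_mean(costs, contracts)))  # 126667
print(round(weighted_sd(costs, contracts)))
```

With equal weights, `weighted_sd` reduces to the ordinary sample standard deviation, which is a quick sanity check on the correction factor.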

Figure 4: Average Per-Study Costs by Phase (in $ Millions) Across Therapeutic Areas

Note: The error bars represent one standard deviation below and above the mean.

While average Phase 4 per-study costs are roughly equivalent to those of Phase 3, Phase 4 costs vary considerably across therapeutic areas.

11 The number of contracts by therapeutic area and trial phase cannot be publicly reported because these data are confidential and proprietary.

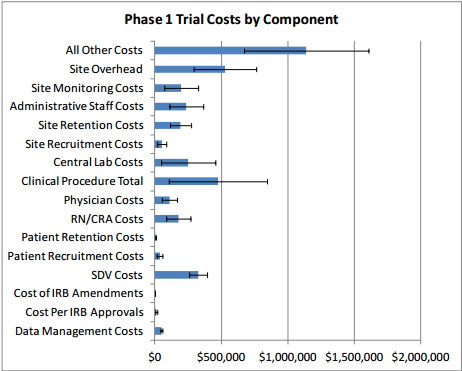

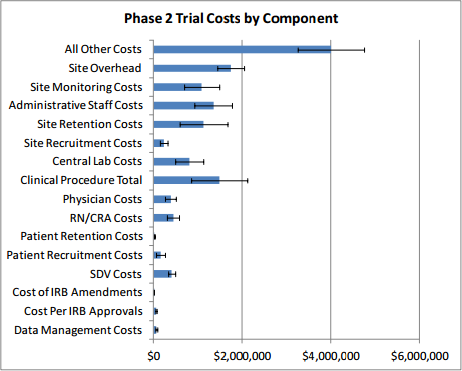

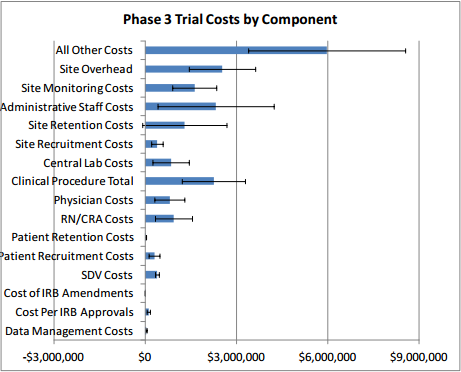

3.3 Costs by Cost Component

Table 2 presents clinical trial costs by cost component across all therapeutic areas, by trial phase. As in our evaluation of costs by trial phase in Section 3.2, we weighted the data by the number of contracts available in each therapeutic area when averaging costs across therapeutic areas. Excluding the All Other Costs and Site Overhead components, which are extrapolated figures, Clinical Procedure Costs ($476,000) are the highest in Phase 1, followed by SDV Costs ($326,000) and Central Laboratory Costs ($252,000). In Phase 2, the largest contributors to overall costs, in descending order, are Clinical Procedure Costs ($1.5 million), Administrative Staff Costs ($1.3 million), Site Retention Costs ($1.1 million), Site Monitoring Costs ($1.1 million), Central Laboratory Costs ($804,000), and RN/CRA Costs ($441,000). Although SDV Costs remain sizable in Phase 2 and are higher in absolute terms than in Phase 1, they constitute only about three percent (= $406,038 ÷ $13.35 million) of total per-study Phase 2 costs, compared with around nine percent (= $326,437 ÷ $3.80 million) in Phase 1. As in Phase 2, Clinical Procedure Costs ($2.3 million), Administrative Staff Costs ($2.3 million), Site Retention Costs ($1.3 million), Site Monitoring Costs ($1.6 million), Central Laboratory Costs ($849,000), and RN/CRA Costs ($940,000) contribute the most to overall per-study Phase 3 costs. In Phase 4, Administrative Staff Costs ($3.3 million) rank the highest, followed by Site Retention Costs ($1.8 million) and Clinical Procedure Costs ($1.7 million). While not insignificant in dollar terms, Patient Recruitment Costs account for only 1.7 to 2.7 percent of the cost subtotal across the different clinical trial phases.
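The SDV percentages quoted above divide by the per-study *total*, while the "% of Subtotal" column in Table 2 divides by the sum of the 14 itemized components only. The short sketch below, using figures from Table 2, shows both ratios side by side so the two sets of numbers can be reconciled; the names are illustrative.

```python
# SDV costs and per-study cost aggregates ($) for Phases 1 and 2, from Table 2.
SDV_COST = {"Phase 1": 326_437, "Phase 2": 406_038}
PHASE_SUBTOTAL = {"Phase 1": 2.13e6, "Phase 2": 7.60e6}  # 14 itemized components
PHASE_TOTAL = {"Phase 1": 3.80e6, "Phase 2": 13.35e6}    # subtotal + overhead + all other


def sdv_shares(phase):
    """Return SDV cost as a share of the (total, subtotal) per-study cost for a phase."""
    cost = SDV_COST[phase]
    return cost / PHASE_TOTAL[phase], cost / PHASE_SUBTOTAL[phase]


for phase in SDV_COST:
    of_total, of_subtotal = sdv_shares(phase)
    print(f"{phase}: {of_total:.1%} of total, {of_subtotal:.1%} of subtotal")
# Phase 1: 8.6% of total, 15.3% of subtotal
# Phase 2: 3.0% of total, 5.3% of subtotal
```

The subtotal-based shares match the 15.32 and 5.34 percent reported in Table 2 up to rounding of the subtotal.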

Table 2: Clinical Trial Costs Across All Therapeutic Areas, by Cost Component and Phase [a] [b]

| Cost Component | Phase 1 ($) | Phase 1 (% of Subtotal) | Phase 2 ($) | Phase 2 (% of Subtotal) | Phase 3 ($) | Phase 3 (% of Subtotal) | Phase 4 ($) | Phase 4 (% of Subtotal) |
|---|---|---|---|---|---|---|---|---|
| Data Management Costs | $50,331 ($8,467) | 2.36% | $59,934 ($21,060) | 0.79% | $39,047 ($19,416) | 0.34% | $49,702 ($9,489) | 0.44% |
| Cost Per IRB Approvals | $11,962 ($6,305) | 0.56% | $60,188 ($16,092) | 0.79% | $114,118 ($46,404) | 1.00% | $137,813 ($112,543) | 1.21% |
| Cost of IRB Amendments | $1,094 ($255) | 0.05% | $1,698 ($447) | 0.02% | $1,919 ($277) | 0.02% | $1,636 ($302) | 0.01% |
| SDV Costs | $326,437 ($65,659) | 15.32% | $406,038 ($80,573) | 5.34% | $400,173 ($66,429) | 3.52% | $353,602 ($62,942) | 3.10% |
| Patient Recruitment Costs | $37,050 ($21,666) | 1.74% | $161,140 ($102,066) | 2.12% | $308,672 ($174,702) | 2.71% | $298,923 ($252,042) | 2.62% |
| Patient Retention Costs | $6,145 ($4,745) | 0.29% | $15,439 ($6,970) | 0.20% | $24,727 ($15,868) | 0.22% | $30,568 ($40,466) | 0.27% |
| RN/CRA Costs | $178,237 ($90,473) | 8.36% | $441,053 ($140,390) | 5.80% | $939,540 ($614,943) | 8.25% | $820,775 ($880,644) | 7.20% |
| Physician Costs | $109,681 ($57,626) | 5.15% | $381,968 ($117,217) | 5.03% | $805,508 ($499,426) | 7.08% | $669,464 ($402,072) | 5.88% |
| Clinical Procedure Total | $475,667 ($371,586) | 22.32% | $1,476,368 ($633,448) | 19.43% | $2,252,208 ($1,033,618) | 19.79% | $1,733,576 ($2,251,401) | 15.22% |
| Central Lab Costs [d] | $252,163 ($203,342) | 11.83% | $804,821 ($313,577) | 10.59% | $849,180 ($600,134) | 7.46% | $419,758 ($377,823) | 3.68% |
| Site Recruitment Costs | $51,904 ($32,814) | 2.44% | $233,729 ($83,799) | 3.08% | $395,182 ($195,983) | 3.47% | $168,343 ($101,311) | 1.48% |
| Site Retention Costs | $193,615 ($79,974) | 9.09% | $1,127,005 ($544,068) | 14.83% | $1,305,361 ($1,382,296) | 11.47% | $1,835,341 ($1,335,892) | 16.11% |
| Administrative Staff Costs | $237,869 ($128,547) | 11.16% | $1,347,390 ($427,859) | 17.73% | $2,321,628 ($1,910,047) | 20.40% | $3,323,081 ($2,534,406) | 29.17% |
| Site Monitoring Costs | $198,896 ($128,142) | 9.33% | $1,083,186 ($392,798) | 14.25% | $1,624,874 ($717,034) | 14.28% | $1,549,761 ($979,371) | 13.60% |
| Subtotal (in $ Million) | $2.13 ($0.86) | 100% | $7.60 ($1.46) | 100% | $11.38 ($4.93) | 100% | $11.39 ($8.53) | 100% |
| Site Overhead [c] | $528,685 ($235,862) | NA | $1,741,811 ($302,049) | NA | $2,541,313 ($1,091,082) | NA | $2,575,007 ($2,082,161) | NA |
| All Other Costs [c] | $1,139,887 ($468,077) | NA | $4,003,615 ($752,108) | NA | $5,967,193 ($2,577,692) | NA | $5,986,008 ($4,543,505) | NA |
| Total (in $ Million) | $3.80 ($1.56) | NA | $13.35 ($2.51) | NA | $19.89 ($8.59) | NA | $19.95 ($15.15) | NA |

NA = Not applicable. Note that the reported numbers represent weighted average costs and standard deviations.

[a] The numbers in parentheses represent standard deviations.

[b] The cost for each phase assumes that a single trial (i.e., study) is conducted.

[c] These are extrapolated figures based on those cost components for which estimates were available from Medidata.

[d] Phase 1 study sites tend to have in-house or local labs rather than central labs.
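Because every component in Table 2 is averaged across therapeutic areas with the same per-area contract weights, the itemized components should add up to the reported subtotal, and the subtotal plus the two extrapolated rows should add up to the total, up to rounding. The sketch below runs that consistency check for Phase 1 using the Table 2 values; the variable names are illustrative.

```python
# Phase 1 weighted mean costs ($) for the 14 itemized components in Table 2.
PHASE1_COMPONENTS = {
    "Data Management Costs": 50_331,
    "Cost Per IRB Approvals": 11_962,
    "Cost of IRB Amendments": 1_094,
    "SDV Costs": 326_437,
    "Patient Recruitment Costs": 37_050,
    "Patient Retention Costs": 6_145,
    "RN/CRA Costs": 178_237,
    "Physician Costs": 109_681,
    "Clinical Procedure Total": 475_667,
    "Central Lab Costs": 252_163,
    "Site Recruitment Costs": 51_904,
    "Site Retention Costs": 193_615,
    "Administrative Staff Costs": 237_869,
    "Site Monitoring Costs": 198_896,
}
SITE_OVERHEAD = 528_685      # extrapolated, footnote [c]
ALL_OTHER_COSTS = 1_139_887  # extrapolated, footnote [c]

subtotal = sum(PHASE1_COMPONENTS.values())
total = subtotal + SITE_OVERHEAD + ALL_OTHER_COSTS

print(f"Subtotal: ${subtotal / 1e6:.2f} million")  # Subtotal: $2.13 million
print(f"Total: ${total / 1e6:.2f} million")        # Total: $3.80 million
```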

3.4 Conclusions

Our study suggests that therapeutic area, as well as the number and types of clinical procedures involved, are the key drivers of costs in Phase 1 through Phase 4 studies. The therapeutic area with the highest per-study costs is immunomodulation in Phase 1 ($6.6 million), hematology in Phase 2 ($19.6 million), pain and anesthesia in Phase 3 ($52.9 million), and respiratory system in Phase 4 ($72.9 million). Figure 5 presents an overview of the different types of costs constituting each phase and their magnitudes. The error bars represent one standard deviation below and above the mean value.

Figure 5: Per-study Costs across All Therapeutic Areas, by Cost Component and Phase

Excluding site overhead costs and costs for sponsors to monitor the study, the top cost drivers of clinical trial expenditures across all study phases are Clinical Procedure (15 to 22 percent of the per-study subtotal), Administrative Staff (11 to 29 percent), Site Monitoring (nine to 14 percent), Site Retention (nine to 16 percent), and Central Laboratory (four to 12 percent) costs (see Table 2 above).
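The percentage ranges above are the minimum and maximum "% of Subtotal" values across the four phases in Table 2, rounded to whole percentages. A small sketch of that derivation, with illustrative names:

```python
# "% of Subtotal" values for Phases 1 through 4, from Table 2.
SHARE_BY_PHASE = {
    "Clinical Procedure": [22.32, 19.43, 19.79, 15.22],
    "Administrative Staff": [11.16, 17.73, 20.40, 29.17],
    "Site Monitoring": [9.33, 14.25, 14.28, 13.60],
    "Site Retention": [9.09, 14.83, 11.47, 16.11],
    "Central Laboratory": [11.83, 10.59, 7.46, 3.68],
}


def share_range(values):
    """Return the (min, max) share across phases, rounded to whole percent."""
    return round(min(values)), round(max(values))


for component, values in SHARE_BY_PHASE.items():
    low, high = share_range(values)
    print(f"{component}: {low} to {high} percent")
# Clinical Procedure: 15 to 22 percent
# Administrative Staff: 11 to 29 percent
# Site Monitoring: 9 to 14 percent
# Site Retention: 9 to 16 percent
# Central Laboratory: 4 to 12 percent
```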

4 Barriers to Clinical Trials

Broadly speaking, the major obstacles to conducting clinical trials in the United States identified through this research include high financial cost, lengthy time frames, difficulties in recruiting and retaining participants, insufficiencies in the clinical research workforce, drug sponsor-imposed barriers, regulatory and administrative barriers, the disconnect between clinical research and medical care, and barriers related to the globalization of clinical research. We discuss each of these in further detail below.

4.1 High Financial Cost

The largest barrier to conducting clinical research—and the one into which most other barriers feed—is the high cost. Studies estimate that it now costs somewhere between $161 million and $2 billion to bring a new drug to market (DiMasi, Hansen, & Grabowski, 2003; Adams & Brantner, 2006; Morgan, Grootendorst, Lexchin, Cunningham, & Greyson, 2011). One particularly well-known and often-cited paper by DiMasi, Hansen, & Grabowski (2003) arrives at a total pre-approval cost estimate of $802 million in 2000 dollars to develop a single drug (inflated to 2012 dollars, this estimate is $1.07 billion) (DiMasi, Hansen, & Grabowski, 2003; U.S. Bureau of Labor Statistics, 2012). More recent estimates of drug development costs are around $1.3 billion to $1.7 billion (Collier, 2009). It is important to note that the DiMasi, Hansen, & Grabowski (2003) estimate and many others in the literature represent fully capitalized costs and are inclusive of failures.
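The conversion of the $802 million (2000 dollars) estimate into 2012 dollars is a ratio of price indexes. The sketch below assumes the Bureau of Labor Statistics annual-average CPI-U (all items, U.S. city average) was the index used; the text cites BLS but does not name the exact series, so treat the index choice as an assumption.

```python
# Annual-average CPI-U, all items, U.S. city average (1982-84 = 100), per BLS.
# Assumption: this is the series behind the 2012-dollar figure in the text.
CPI_U_2000 = 172.2
CPI_U_2012 = 229.594


def inflate(amount, cpi_from, cpi_to):
    """Restate a dollar amount in another year's dollars via the price-index ratio."""
    return amount * cpi_to / cpi_from


cost_2012 = inflate(802e6, CPI_U_2000, CPI_U_2012)
print(f"${cost_2012 / 1e9:.2f} billion")  # $1.07 billion
```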

The DiMasi, Hansen, & Grabowski (2003) research on this topic was sponsored by the Tufts Center for the Study of Drug Development (CSDD) and has been the subject of much debate among researchers. Light & Warburton (2005) contend that the $802 million figure is far too high due to “problems with the data and sampling,” specifically small sample size, differences in cost allocation methods over time and across companies, upward biases in industry-reported costs, the types of drugs included, and failure to adjust for government subsidies or tax deductions/credits. Light & Warburton (2005) also criticize the authors’ use of proprietary and confidential data, which precludes independent verification. DiMasi, Hansen, & Grabowski respond to these concerns, stating that the accuracy of their results is bolstered by cross-checks against other sources and by validation from the U.S. Office of Technology Assessment (DiMasi, Hansen, & Grabowski, 2005). Adams & Brantner (2006) also sought to replicate the findings of DiMasi et al. (2003) using publicly available data. They arrived at a cost estimate of $868 million, suggesting that the $802 million figure might actually be an underestimate. The authors caution, however, that estimated costs vary widely depending on drug type, therapeutic area, regulatory policies, and strategic decision-making by drug sponsors, so policymakers should be careful about using a single number to characterize drug development costs (Adams & Brantner, 2006).

Although experts debate the accuracy of various cost estimates, there is widespread agreement that clinical trial costs are substantial and rising. According to a 2007 article, the average cost of developing a drug had risen at a rate 7.4 percent higher than inflation over the previous two decades, mostly due to rising clinical trial costs (Collier, 2009). Costs also tend to increase as a drug progresses through each phase of the pipeline, and, as the Institute of Medicine (IOM) notes, Phase 3 clinical trials have become “extraordinarily expensive” (English, Lebovitz, & Giffin, 2010). DiMasi, Hansen, & Grabowski (2003) report that the mean costs per investigational drug entering a phase are $15.2 million for Phase 1, $23.5 million for Phase 2, and $86.3 million for Phase 3. Using publicly available data and a larger sample than DiMasi et al. (2003), Adams & Brantner (2010) estimate the average expenditure per drug in human clinical trials at around $27 million per year: $17 million per year for drugs in Phase 1, $34 million per year for drugs in Phase 2, and $27 million per year for drugs in Phase 3. Note that DiMasi et al. (2003) present costs for the average drug over the entire length of each phase, whereas Adams & Brantner (2010) present expenditures for one year. Multiplying the latter by average phase durations yields estimates of $24 million, $86 million, and $61 million for Phases 1, 2, and 3, respectively (Adams & Brantner, Spending on new drug development, 2010).
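The average phase durations used in that multiplication are not stated here, but they can be backed out from the two sets of Adams & Brantner (2010) figures, since per-phase cost is approximately annual spending times duration. The inputs are rounded, so the implied durations below are approximate and are not values reported by the authors.

```python
# Adams & Brantner (2010) figures quoted in the text, in $ millions per drug.
ANNUAL_SPEND = {"Phase 1": 17, "Phase 2": 34, "Phase 3": 27}
PHASE_TOTAL = {"Phase 1": 24, "Phase 2": 86, "Phase 3": 61}


def implied_durations(annual, totals):
    """Back out the phase duration (years) implied by total = annual * duration."""
    return {phase: totals[phase] / annual[phase] for phase in annual}


for phase, years in implied_durations(ANNUAL_SPEND, PHASE_TOTAL).items():
    print(f"{phase}: about {years:.1f} years")
# Phase 1: about 1.4 years
# Phase 2: about 2.5 years
# Phase 3: about 2.3 years
```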

While the reasons for these high costs are manifold, a few key macro-level trends stand out. One contributing factor is the productivity of the drug industry in past years. High levels of investment in research and development have yielded so many drugs that companies are now finding it difficult to develop truly innovative pharmaceuticals. As a result, most new drugs are actually just variations of existing drugs, intended to be only incrementally more effective or safer than those already on the market. Detection of such small, incremental improvements requires studies with large numbers of patients (Collier, 2009), and with greater numbers of participants comes greater expenditure on recruitment efforts, data collection, compliance with administrative requirements, and other trial components.
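Why small incremental improvements demand large trials can be seen from the standard normal-approximation sample-size formula for comparing two means, $n = 2(z_{1-\alpha/2} + z_{1-\beta})^2 / d^2$ per arm, where $d$ is the standardized effect size. The sketch below is a generic textbook illustration, not a calculation from this study; the effect sizes, significance level (0.05), and power (80 percent) are assumed for illustration. Halving the detectable effect roughly quadruples the required enrollment.

```python
import math
from statistics import NormalDist


def n_per_arm(effect_size, alpha=0.05, power=0.80):
    """Approximate patients per arm for a two-sided, two-sample comparison of
    means; effect_size is the difference in means divided by the common SD."""
    z = NormalDist()  # standard normal distribution
    z_alpha = z.inv_cdf(1 - alpha / 2)
    z_beta = z.inv_cdf(power)
    return math.ceil(2 * (z_alpha + z_beta) ** 2 / effect_size ** 2)


# Hypothetical standardized effect sizes, from moderate to small.
for d in (0.5, 0.25, 0.125):
    print(d, n_per_arm(d))
# 0.5 63
# 0.25 252
# 0.125 1005
```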

In addition, the biopharmaceutical industry has shifted toward chronic and degenerative disease research, which, given the aging of a large segment of the population, has the potential to secure steady and sizeable revenue streams for companies that can capture a share of these markets (Collier, 2009; DiMasi, Hansen, & Grabowski, 2003). However, clinical trials for these chronic conditions (such as arthritis, dementia, and cardiac diseases) tend to involve complex and expensive testing, large numbers of patients, and long timeframes, because extended drug exposure is required to identify potential long-term effects. Multiplying these long-term data requirements by large numbers of patients yields enormous volumes of data that must be collected, processed, analyzed, and reported, all at great cost to the sponsor.